In the last couple of years I’ve had little reason to use Windows other than when I get the urge to play video games. Given that, it often feels somewhat excessive to have a machine solely for this purpose. I could sacrifice a machine to dual boot, but the only suitable one I have, an ageing AMD 1920X box, currently serves as an ESXi host running a number of long-lived services.

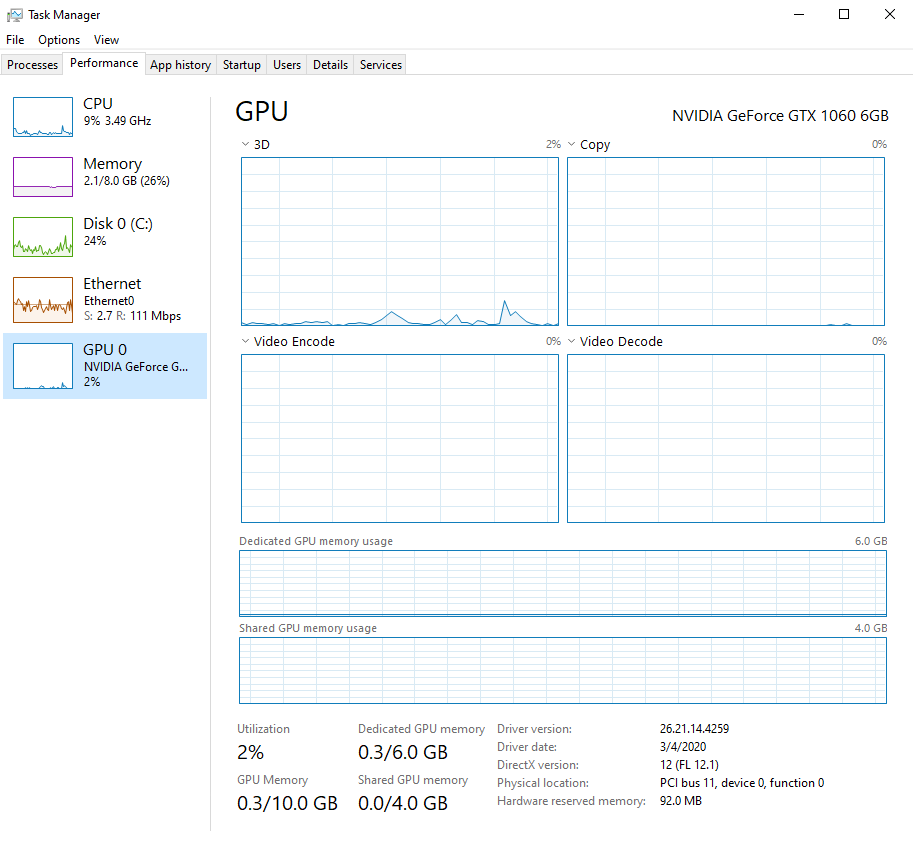

The answer seems obvious: virtualize Windows via ESXi. I would’ve done this long ago, but I’ve always had issues getting PCI passthrough working so that Windows has a dedicated GPU to consume (side note: you might think that virtualizing Windows would introduce enough latency to make playing video games unbearable, but it turns out it’s actually perfectly fine, especially if you can provide the VM with direct-ish access to a GPU). After reading a lot of random threads across the internet I recently got it all working and am now able to play video games to my heart’s content on the same metal that is running my various development services.

In case others are interested in giving this a go without scouring the internet for scraps of advice, here are the steps that eventually worked for me (these are mostly specific to Nvidia GPUs).

- Enable passthrough for all PCI sub-devices on your GPU. My card only provides a video and an audio device, but others may have a whole host of random things that are all expected to be able to talk to each other.

- Enable the proper reset modes for the devices in `/etc/vmware/passthru.map`. For the Nvidia card I’m using, `d3d0` power management seems to do the trick, which also required disabling the default Nvidia `bridge` wildcard entry. You can learn the PCI vendor/device IDs using `lspci -n`.

- Add all the PCI devices to your Windows VM and disable MSI in order to force the driver to use INTx as recommended by Nvidia. This is done by setting `pciPassthruN.msiEnabled` to `false` for each device (where `N` is the device number for the VM).

- Set `hypervisor.cpuid.v0` to `false`. This prevents ESXi reporting to the VM that it’s… well… a VM. This seems necessary as the Nvidia GPU drivers don’t seem to like to run on a virtualized machine (there is speculation that this is because Nvidia wants people to buy their datacenter specific Quadro cards rather than using consumer cards in servers).

- Disable the virtualized SVGA adapter by setting `svga.present` to `false`. This may not be strictly necessary, but I found that keeping it enabled caused increased latency when trying to do anything graphically taxing.
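To make the steps above a bit more concrete, here is a rough sketch of what the two files might end up containing. Treat it as an illustration rather than something to paste verbatim: the `XXXX` and `YYYY` device IDs are placeholders for whatever `lspci -n` reports for your card's video and audio functions, and the column layout (vendor ID, device ID, reset method, `fptShareable`) follows the entries that already ship in the file. `10de` is Nvidia's PCI vendor ID.

```
# /etc/vmware/passthru.map (sketch, device IDs are placeholders)

# Default Nvidia wildcard entry, commented out to disable it
# 10de  ffff  bridge  false

# Per-device entries using the d3d0 reset method
10de  XXXX  d3d0  false
10de  YYYY  d3d0  false
```

The VM-side settings can be added as advanced configuration parameters or directly in the VM's `.vmx` file. The sketch below assumes the GPU's two functions were added as passthrough devices `0` and `1`; adjust the `N` in `pciPassthruN` to match your VM.

```
pciPassthru0.msiEnabled = "FALSE"
pciPassthru1.msiEnabled = "FALSE"
hypervisor.cpuid.v0 = "FALSE"
svga.present = "FALSE"
```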

And that’s it. While trying to get this all working I ran across a number of other random suggestions, but after slowly whittling them down this ended up being all I really needed. Now I can play Counter-Strike to my heart’s content. What a world we live in!